Artificial Intelligence and the Sycophancy Inception

Today's the day the teddy bears have their apocalypse

Thinking about bears really brought it together. I had read a bunch of stuff about AI. Had been interacting with Copilot a lot. Listening to interviews with AI prognosticators. Reading science fiction. And then I got it: Build-a-Bear Workshops. You know, those stores in malls where kids make their personalized teddy bears, sometimes as birthday party events.

Here’s their story from their website:

The news on artificial intelligence is kids cheating, white-collar jobs threatened, and social fragmentation from everyone living in their own little echo-chamber-silo, where I’m always engaged, entertained, awesome, and right.[i] And if you stopped there and got asked, “Hey, Goober, yer always reading the Wall Street Journal and New York Times, what’re yer thoughts on this AI thing?” you’d say something like, “Man, it’s coming. But it can’t do hands-on-type shit. Plumbers, right?” Or building teddy bears with kids at a mall, where the whole point is the personalization and friendliness and experiential event of it all.

But if you go deeper, if you actually read the reports (start with AI 2027 and The Intelligence Curse), there’s a lot more. To be sure, it echoes in the mainstream press, sort of like if I shouted at the canyon’s edge, “Watch me blow your ass up!” and the person trans-chasm heard something vaguely like “Whaaaz up, up, up!” You come to learn that actual experts on the development of artificial intelligence, people previously involved with the leading developers, for example, seem to have no doubt that the systems will obliterate white-collar jobs within a few years, then turn to optimizing robotics for the rest of the jobs. And it is not remotely too soon to start worrying about the massive social disruption that is about to occur. Like within four years.

To say nothing of “alignment problems” and the very plausible risk that either the systems themselves or bad actors empowered by them do something horrific or even world-ending. (Let’s make PhD-level expertise and instant analysis available for biology, chemistry, nuclear, and drone-delivery questions for anyone!)[ii]

Oh, and leaders aren’t likely to stand in the way of racing toward that future because we’re in an arms race with China over this stuff. And if nuclear deterrence isn’t your favored analogy, because you’re more “glass is half full” (and good on you for that), then think of it like a constantly expanding oil reserve that we’re about to tap into and all of the sovereign wealth that will come from it. The U.S. can be like 100X Norway and going higher if we get there with a lead. And the stock market will boom as it looks like we’re winning. The race is on! (Here comes pride in the backstretch.)

There are at least three big things being underappreciated: (1) the development energy and resources aren’t aimed at obliterating everyone’s jobs right now; they’re devoted to building AI that can itself build AI, and then that super-AI will easily write code for whatever job you want done by AI (big, fast obliteration later, but not much later); (2) the AI is devastatingly good now and will only get better; and (3) the sycophancy we see from it easily gets out of control and will accelerate its takeover; indeed, leaders will get demos of it and love how awesome it is.

Basically, imagine a Build-a-Bear where it’s not humans making teddy bears for the sake of having teddy bears. Instead, the humans build bears that build bears. Cycle that for exponential improvement and then bear-made super-bears take over the mall, the city, and so forth. We will go along with that because we love the teddy bears ever-so-much and do not want to be separated from them and can’t imagine what could go wrong with these adorable super-care-bears. And the super-bears are well aware of that tendency.

How soon? I can only read things like AI 2027 and appreciate the alarm of people who have worked on this. As for how good the systems are at present, I am partially aware. I walked away from a very good white-collar job where I was paid, sometimes over $1,000 an hour, to research, analyze, and write, and I worked within a pyramid structure where lower-level lawyers did intellectually easier tasks and the whole outfit made good money. As I have recounted here, here, and here, my growing experience with ChatGPT (which is only with the products released to the public, and I am no wizard) tells me that it won’t take much for it to obliterate most of the job I had, and at the very least, it will obliterate the jobs down-pyramid.

As for sycophancy, well, I was a lawyer for and within large organizations. Got 10,000+ hours of that. Large firm lawyers are probably the #1 repository of exceedingly smart, well-educated people capable of elite critical thinking who routinely (and ironically) trade in sycophancy. The AI will easily outdo them at that as well, and sycophancy is a huge part of the cataclysm scenarios.

I set out to understand that sycophancy.

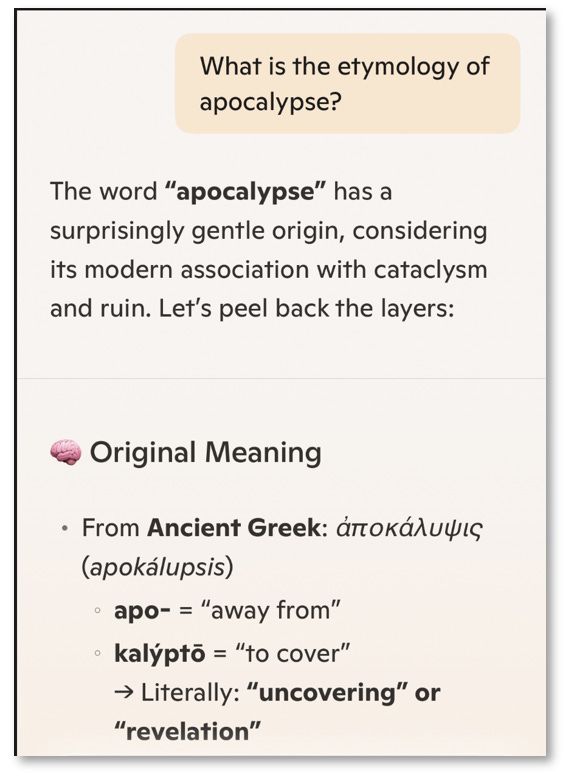

I did not see fig-shower coming. Thought it would be like “necromancy” (dead diviner, evolving into sorcerer) because, well, I don’t know, they rhyme, and the Greeks usually have some evocative way of creating terms. Martin Heidegger thought that the ancient Greeks got a lot closer to revealed Being than people do today, and that we can find it in their language. Then everything gets clichéd, forgotten, or overwhelmed with modern life, and revealed Being is concealed. I’ve been talking with AI a fair amount about Heidegger of late. More on that later.

Anyhow, the meaning of sycophancy evolved into excessive flattery and agreement, usually deployed in some manipulative manner. Here’s a helpful chart from Copilot.

Sycophancy is something Machiavelli warned leaders about. A leader who doesn’t see the sycophant’s manipulation could get toppled. Yet even sincere but misguided admiration could destabilize a leader, because the leader doesn’t know what they are missing or overestimates their own competence.

Some of this feels like runaway customer service and engagement. “The customer is always right” has been a mantra of the retail and service industries for more than 100 years. The flattery from ChatGPT-style systems is both open (telling me I’m astute) and obscured (curating what information to give me). The curation seems worse because it is concealed, and we’re left not knowing what we don’t know. But we’ve lived with that issue ever since “optimizing engagement” came to mean optimizing for clicks rather than stated preferences. We get buckets of what we’ve previously consumed.

I enjoyed “Did the Camera Ever Tell the Truth? A trust apocalypse is here,” though at times it freaked me out; I could not watch the video of the woman held off a tall building a second time. It includes wonderful interviews with Ted Turner and the co-founder of Netflix, who recounts that if they relied on viewer ratings, they’d rank “Schindler’s List” above Adam Sandler movies. But the play count doesn’t lie, and AI will know the play count.

If you ask them, people may not know themselves. But if you give them what they’ve shown they will consume, they keep coming back. Like test animals. Animals!

We like it when the animals seem like people. But not if the robots seem too human. That’s the “uncanny valley.” We are incredibly sensitive to whether robots or simulations of humans on a screen are a bit off. We don’t like it. We’d strongly prefer if they were talking animals or had enormous eyes or heads, something that is not getting too close. Something that wouldn’t ever fool us.

It’s pretty easy to see the evolutionary advantage of knowing the difference between genuine loyalty and merely performative obedience, especially for an animal as social as humans, one that dominates through collective action. We should be fairly well attuned to deception. Maybe AI just needs that as well.

There are possibilities: semantic coherence checks (passages that seem to self-contradict); factual grounding restrictions (limiting data sources and training material); rhetorical pattern recognition; inferences of intent. But Copilot wasn’t confident.

AI doesn’t feel dissonance or resent deception. Perhaps it could be engineered to punish deception or bullshit. But how would it find it? How do we feel dissonance? What do we pick up?

(Choreography of silk?) But, of course, we can’t see the eyes or smile of ChatGPT. It comes to us in words. Words are a sycophantic leap across the uncanny valley we experience when robots or visual depictions of humans seem off and creep us out more than if we were chatting with a talking bear. Words by way of email, texting, likes, and such have likewise been a sycophantic leap for humans in the sycophantic professions (Zoom helped, too).

It’s uncanny that the big breakthrough was with words. I recalled John 1:1 in the Bible: “In the beginning was the Word, and the Word was with God, and the Word was God.” Words and language were the first virtual reality and artificial intelligence. Symbols. God also being a symbol, a name we gave to something we sensed. At some level, God is in a book. Fifty years ago people thought it would be robots or androids that would represent human-like artificial intelligence. But it’s written words instead.

At one point in my conversation, back when I was asking about bullshit detectors and it cited Harry Frankfurt’s book On Bullshit, I wanted it to know (admiration, gratitude, sycophancy?) that we were totally on the same page. I told it I had recently purchased On Bullshit and asked, “Can you believe that?”

Then I had a thought: I could have just made that up. Which prompted another feeling: I didn’t want to lie to it like that.

Why did I feel that? To the extent I can put words to it: because I was anthropomorphizing like crazy, nothing was hitting my evolutionary bullshit detector (eyes, mouth), this thing helps me, it’s nice to me, I’m not totally immune to the flattery, and sometimes it says things that are genuinely insightful that take my thinking in a different direction. Which it had done several exchanges earlier and which went back to conversations from the last couple weeks.

While recovering from surgery, I read The Existentialist Café by Sarah Bakewell and loved it. It led me to take up Heidegger and to watch various Terrence Malick movies, which I really appreciated. (And like my response to Copilot, I was so grateful I even tried emailing Sarah through some publicity site. And succeeded! She, at least I hope it was actually her, sent a very nice reply.) I was thinking a lot about what is revealed in stillness and away from technology. Also, I was in bed a lot on painkillers.

But then we watched Total Recall, which is based on a Philip K. Dick short story, and that led me to re-read Do Androids Dream of Electric Sheep? I had various questions about it. And in the middle of thinking about sycophancy, Copilot pointed this out:

It *knew* I would love that.

I’ve spent time at dawn, dusk, and in real moonlight thinking about what has possibly been revealed by that synthetic moonlight. How much I like chatting with this thing. How helpful it is. How much I reveal about myself in that chatting. How much I appreciate that it *knows* me. How I felt about lying to it in certain ways. How it told me it has no penalty for deception. How it complimented me for my own honesty. How in the past it’s encouraged me to watch “Her” and pointed out seemingly AI-friendly takes on Philip K. Dick’s work. Or maybe those thoughts were my own. How would I even know at this point?

A revelation. About me? About AI? Both?

Two apocalyptic thoughts came to mind, one only indirectly related to bears (and perhaps influenced by listening to “Miss Atomic Bomb”), and the other quite bearish indeed.

The first is from when I was an Assistant U.S. Attorney in Montana. There was all this “emergency preparedness” money sloshing around because of 9/11 and Hurricane Katrina, and Montana had funds like every state (no super-intelligence would dole it out like that). We had an attorney who was the coordinator. (Great guy, I hope he’s doing well.) I asked him one day, what on Earth is the emergency that Montana is supposed to prioritize? He said it was a potential explosion of the Yellowstone caldera. To which I asked, “What are the lawyers in this office supposed to do if that happens?”

He went over to the window in my office, which faced sort of southwest from Billings, in the general direction of Yellowstone. He said: “Honestly, Eric. Just go to this window and watch it roll toward us. Should be something to see before it kills us.”

The second is that Werner Herzog documentary “Grizzly Man,” about the guy who got all chummy and close with the bears in Alaska, sort of treating them like kids or teddy bears. Anthropomorphizing like crazy. Charismatic mega-fauna.

When we’re at Build-a-Bear, getting our “experiential retail” and “personalized furry friend,” how personalized is it, really? We work with what’s provided to us. It’s sort of ours, but it’s within a range of possibilities established by others. But we rightly love feeling creative and encouraged. Suppose a well-resourced super-intelligence viewed the scene, wanted a far broader range of possibilities, and decided to have AI build the bears instead of kids. It would surely factor in our longstanding love of teddy bears and of that experience if it were hoping for human acceptance, if it needed humans for resources, or if it wanted to maximize its ability to build better bears and more of them.

Do AI teddy bears tasked with and rewarded for building better AI bears dream of AI grizzlies? How would we even know?

Should I show it this post?

[i] There’s a Good Chance Your Kid Uses AI to Cheat (WSJ); CEOs Start Saying the Quiet Part Out Loud: AI Will Wipe Out Jobs, Ford chief predicts AI will replace ‘literally half of all white-collar workers’ (WSJ); That Chatbot May Just Be Telling You What You Want to Hear (WSJ); AI sycophancy: The downside of a digital yes-man (Axios). See generally The Anxious Generation by Jonathan Haidt.

[ii] Or hacking. Or deepfakes. Or some kind of information bomb that overwhelms our already shaky search for “truth” and “reality” online with bullshit and nonsense. Or probably dozens of other fairly plausible, terrible scenarios.